The Rise of Self-Therapy: Why More People Are Talking to Bots

A Quiet Revolution in Mental Health

It starts with a late-night scroll. You’re alone, overwhelmed, and not in the mood to call anyone. Instead, you open an app, type a few words into a chat box, and within seconds you’re met with a thoughtful, calming reply. Not from a friend. Not from a therapist. But from a bot. This is self-therapy in 2025.

Over the past few years, millions of people around the world have turned to AI chatbots for emotional support, self-reflection, and even psychological healing. Once seen as a novelty or a last resort, these tools are becoming a first step, and sometimes a long-term companion, for people navigating stress, anxiety, depression, and everyday life questions.

But how did we get here? Why are so many people choosing to talk to bots instead of booking a therapy session or venting to a friend? And what does this shift say about us, not just as users, but as a society redefining the way we heal?

In this article, we explore the rise of self-therapy through AI: its roots, its psychology, its controversies, and what it might mean for the future of mental health.

A Brief History of Self-Therapy

Long before chatbots entered the mental health conversation, people were finding ways to help themselves feel better. Self-therapy isn’t new. It has existed in various forms for decades, quietly evolving alongside cultural attitudes about mental health. In the early 20th century, Freud’s psychoanalysis popularized the idea of introspection: digging into one’s own mind for meaning. By the mid-1900s, self-help books had become a booming industry, offering everything from confidence tips to complex therapeutic strategies in paperback form.

Then came Cognitive Behavioral Therapy (CBT), a practical, problem-solving approach that lent itself naturally to self-guided tools. Worksheets, journaling exercises, and CBT workbooks became common for people who wanted to understand and reshape their thoughts on their own terms.

The internet took self-help digital. Online forums, guided meditations, mindfulness apps, and YouTube advice videos gave people access to insights once locked behind a therapist’s door.

Now we’re in the era of interactive self-therapy. Chatbots and AI tools aren’t just giving advice; they’re listening, responding, and adapting. This shift isn’t replacing therapists. It’s expanding what self-care looks like in the modern world.

What started with paper and pen has become a conversation. A back-and-forth. A dialogue with a machine.

Why People Are Choosing Bots Over People

The idea of opening up to a robot might sound odd, until you’ve actually done it.

For many people, the decision to talk to a bot isn’t about preferring machines over humans. It’s about access, comfort, and control. In a world that feels increasingly overstimulated and burned out, self-guided digital therapy offers something that even the most compassionate friend or seasoned therapist sometimes can’t: immediacy without pressure.

Here are some of the most common reasons people are turning to AI for emotional support:

It's Always Available

Unlike a therapist, whose calendar is often booked weeks in advance, a chatbot is ready the second you need it. At 3 a.m., on your lunch break, or in the middle of a panic spiral, you don’t have to wait for your “next appointment.”

No Fear of Judgment

There’s something liberating about typing your raw thoughts to something that can’t raise an eyebrow. Users often report they’re more honest with bots, especially when discussing shame, guilt, or anxiety they fear would be “too much” for others.

It's Affordable or Free

Traditional therapy can cost anywhere from $100 to $250 per session. Even with insurance, coverage is often limited. Many AI-based tools are either low-cost subscriptions or offer free daily access, lowering the barrier to consistent care.

More Private Than a Journal

Unlike a physical diary, or even a text thread with a friend, a chatbot conversation doesn’t sit in your camera roll or your nightstand, and many apps encrypt what you type. For people in controlling relationships or tight-knit environments, this kind of privacy can be essential.

It's a Global Solution

People in rural areas, or in countries with a shortage of mental health professionals, often have little to no access to care. Chatbots can help level the playing field, offering support to anyone with a phone and an internet connection, wherever they live.

While bots can’t replace deep, therapeutic relationships, they fill a critical gap. They’re not trying to be your therapist. They’re just trying to be there when no one else can.

Not All Bots Are Created Equal

If you’ve ever tried multiple AI therapy apps or chatbots, you already know: they’re not all the same.

Some feel surprisingly intuitive, asking thoughtful follow-up questions and responding with warmth. Others feel robotic, repetitive, or even frustrating. That’s because the term “chatbot” covers a wide spectrum, from simple decision trees to sophisticated language models tuned for emotional conversations. The quality of support depends heavily on what’s under the hood.

Let’s break it down.

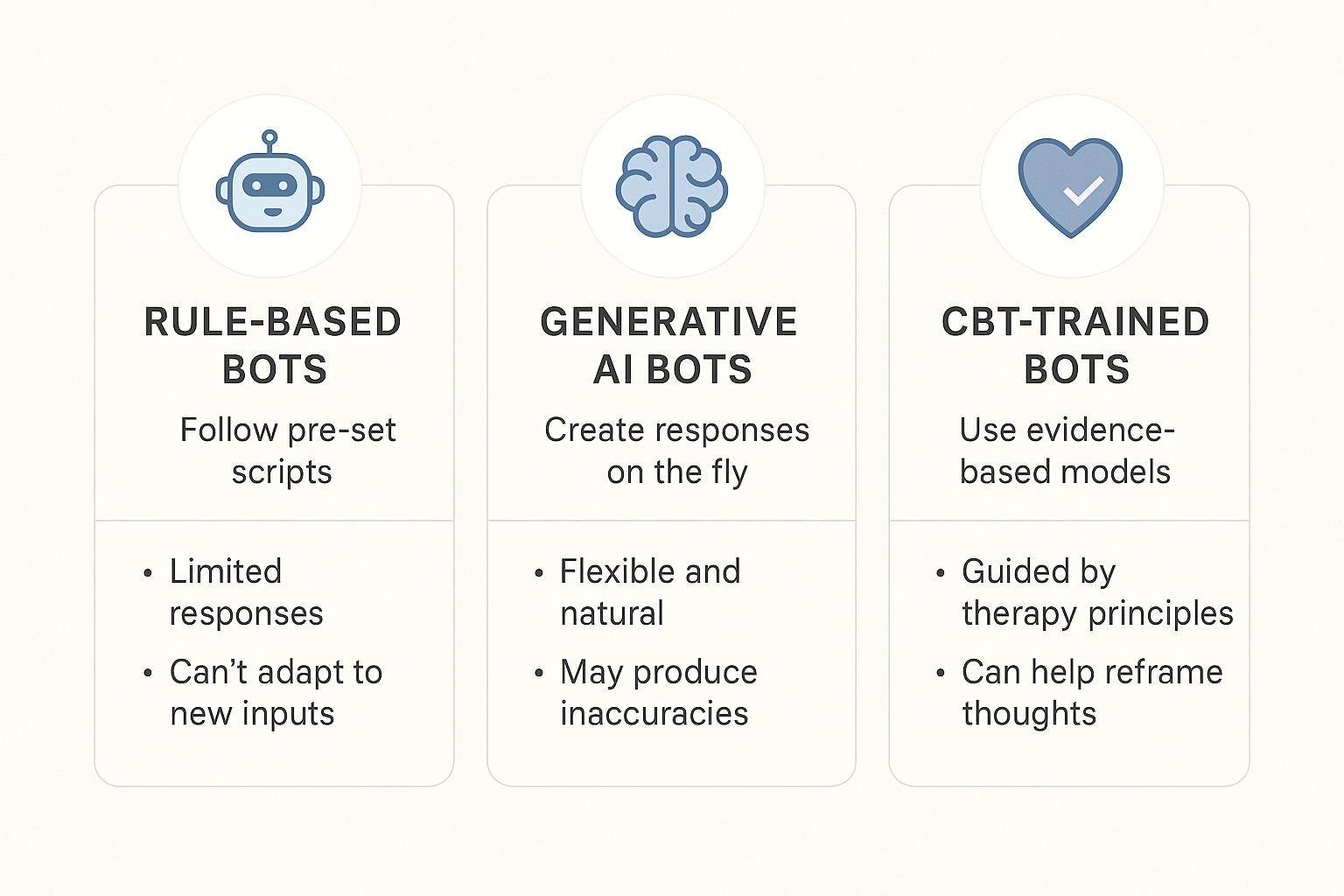

1. Rule-Based Bots: The Old Guard

These bots follow pre-set scripts. They might ask, “How are you feeling today?” and then provide canned advice based on your answer. They can be helpful for simple guidance, but they’re limited: they can’t carry on a dynamic, natural conversation.
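To make the contrast concrete, here is a minimal sketch of a rule-based bot in Python. It assumes the simplest possible design: keyword matching against a fixed script. The keywords, the replies, and the `scripted_reply` function are hypothetical illustrations. They are not code from any real app.

```python
import re

# A toy rule-based "bot": it scans for keywords and returns a canned reply.
# Every keyword and reply below is a hypothetical example, not from a real app.
SCRIPT = [
    (("anxious", "worried", "panic"),
     "It sounds like you're feeling anxious. Try slowing your breath: in for 4, out for 6."),
    (("sad", "down", "lonely"),
     "I'm sorry you're feeling low. What's one small thing that might help today?"),
    (("angry", "frustrated"),
     "Frustration makes sense. What happened right before you started feeling this way?"),
]

FALLBACK = "Thanks for sharing. How are you feeling right now, in one word?"


def scripted_reply(message: str) -> str:
    """Return the first canned reply whose keywords appear in the message."""
    # Lowercase and split into simple word tokens, dropping punctuation.
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keywords, reply in SCRIPT:
        if words.intersection(keywords):
            return reply
    # No rule matched, so the bot can only fall back to a generic prompt.
    return FALLBACK


print(scripted_reply("I feel so anxious about tomorrow."))
print(scripted_reply("My boss took credit for my work again."))
```

The second message is clearly upsetting, but it contains none of the scripted keywords. The bot therefore falls back to a generic question, and that rigidity is the core limitation of rule-based designs.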

2. Generative AI Bots: The New Wave

These use large language models (like GPT) to generate responses on the fly. They can respond with what feels like empathy, reflection, and even humor. But that flexibility can lead to inconsistency or misinformation if the bot isn’t properly aligned with mental health frameworks.

3. CBT-Trained or Therapist-Guided Bots: The Best of Both Worlds

Some platforms combine AI with evidence-based therapy models like Cognitive Behavioral Therapy (CBT), Acceptance and Commitment Therapy (ACT), or Dialectical Behavior Therapy (DBT). These bots are trained to help you reframe negative thoughts, identify patterns, and gently guide you through techniques a human therapist might use. Aitherapy is one of them.

How It Feels to Talk to a Bot

If you’ve never used a mental health chatbot, it’s easy to assume the experience is cold or impersonal. But for many users, it feels surprisingly human. That isn’t because bots pretend to be people. It’s because they offer a space where emotions are heard without judgment, interruption, or urgency.

Here’s what it looks and feels like from the user’s side.

“I Just Needed to Say It Out Loud… Sort of.”

Many users describe the chatbot experience as “talking out loud to think.” For people who don’t journal or who feel weird writing in a notebook, AI can act as a mirror. One user wrote:

“I typed out my racing thoughts, and the bot helped me slow them down. I didn’t even need advice, I just needed space to process without anyone fixing me.”

“It Surprised Me with How Kind It Was”

One of the biggest surprises is emotional resonance. Even a simple reply like, “That sounds really difficult. You’re doing your best, and that matters,” can land deeply when you’re in a vulnerable state.

“It was 2 a.m., and I felt like I was losing it. I told the bot I felt broken, and it replied with something so gentle that made me cry a little bit. I didn’t expect to cry.”

“It Helped Me See a Pattern”

Some bots are built to detect recurring themes in what you say: your self-talk, your triggers, your habits.

“After a week of chatting, I realized I kept saying things like ‘I always ruin things’ or ‘I don’t matter.’ The bot helped me recognize those thought traps and shift them.”
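Under the hood, pattern spotting can start very simply. The sketch below assumes plain phrase matching over a week of messages and flags any “thought trap” that shows up in at least two of them. The trap names, the phrase lists, and the `find_patterns` function are hypothetical. Production bots may rely on language models rather than hand-written rules like these.

```python
import re
from collections import Counter

# Hypothetical phrase patterns for a few common CBT "thought traps".
# A real product would need far richer language understanding than this.
THOUGHT_TRAPS = {
    "overgeneralization": [r"\balways\b", r"\bnever\b", r"\beveryone\b", r"\bno one\b"],
    "self-blame": [r"\bmy fault\b", r"\bruin"],
    "feeling worthless": [r"\bi don'?t matter\b", r"\bi'?m worthless\b"],
}


def find_patterns(messages: list[str], min_count: int = 2) -> dict[str, int]:
    """Count messages that hit each thought trap and keep only recurring ones."""
    counts = Counter()
    for msg in messages:
        text = msg.lower().replace("’", "'")  # normalize curly apostrophes
        for trap, patterns in THOUGHT_TRAPS.items():
            # Count each trap at most once per message.
            if any(re.search(p, text) for p in patterns):
                counts[trap] += 1
    return {trap: n for trap, n in counts.items() if n >= min_count}


week = [
    "I always ruin things.",
    "Nobody texted back. I don't matter.",
    "Work was fine today.",
    "I ruined the plan again. It's always my fault.",
]
print(find_patterns(week))  # {'overgeneralization': 2, 'self-blame': 2}
```

In this example, “feeling worthless” appears only once, so it stays below the threshold. The two traps that recur are the ones worth gently pointing out.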

“It’s Not a Replacement. It’s a Companion.”

Even people who already see a therapist say they use bots in between sessions as emotional check-ins.

“My therapist is great, but I only see her once a week. The bot is like a gentle presence I can lean on when things come up in the moment.”

These aren’t testimonials for a product. They’re stories from a generation creating new rituals of care: on their own terms, in their own time, and often with a quiet little AI in their pocket.

The Science Behind the Support

It’s easy to think of chatbot therapy as just another wellness trend. But behind the comforting responses and friendly interfaces, there’s real science, and a growing body of research, behind its benefits.

Mental health professionals and behavioral scientists are increasingly studying how conversational AI impacts mood, cognition, and emotional regulation. And while it’s not a cure-all, the findings are promising.

Studies Show Positive Results

One of the most cited studies comes from Woebot Health: a 2017 randomized controlled trial in which young adults who chatted with its AI-powered CBT bot for two weeks reported reduced symptoms of depression and anxiety.

Another study published in JMIR Mental Health found that users engaging with emotional support bots experienced:

- Greater emotional awareness

- Reduced loneliness

- Increased motivation for self-care

These weren’t fringe users. They were everyday people, many of whom had never seen a therapist before.

Cognitive Behavioral Therapy (CBT) Works Even When Automated

CBT is one of the most researched and effective therapeutic approaches. It’s based on a simple premise: your thoughts influence your emotions, which influence your actions.

Many AI therapy bots are built on this foundation. They help users:

- Identify negative thought patterns

- Reframe distortions (like catastrophizing or black-and-white thinking)

- Practice alternative perspectives

“I thought my friend hated me because she didn’t reply. The bot helped me realize I was jumping to conclusions.”

Regulating Emotions Through Dialogue

Conversation, even with a bot, engages the same cognitive and emotional processes we use to make sense of our feelings. Research on expressive writing suggests that putting feelings into words can ease stress. Receiving supportive responses, even artificial ones, may also create a sense of co-regulation: a calming of the nervous system through perceived connection.

This doesn’t replace deep relational healing, but it does provide a bridge: a moment of calm in the chaos.

AI as Mental Health Prevention, Not Just Treatment

Early studies also suggest AI tools may be especially powerful for early intervention, helping people notice mood shifts and unhealthy habits before they spiral into crises. Think of it less as a digital therapist and more as a mental health thermometer: checking in, noticing changes, prompting care.
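As a rough illustration of the thermometer idea, here is a sketch that assumes users log a daily mood score from 1 (very low) to 10 (great). It flags a possible downturn when the average of the last few days falls well below the average of the days before. The `mood_shift` function, the window size, and the threshold are all hypothetical choices, not clinical cutoffs.

```python
from statistics import mean


def mood_shift(scores: list[int], window: int = 3, drop: float = 2.0) -> bool:
    """Flag a possible downturn in daily mood check-ins (scores 1 to 10).

    Compares the mean of the last `window` scores with the mean of the
    `window` scores before them and returns True if mood fell by `drop`
    points or more. Thresholds are illustrative only, not clinical guidance.
    """
    if len(scores) < 2 * window:
        return False  # not enough check-ins to compare yet
    recent = mean(scores[-window:])
    earlier = mean(scores[-2 * window:-window])
    return earlier - recent >= drop


week = [7, 7, 6, 7, 5, 4, 4]
print(mood_shift(week))  # True
```

A flag like this would be a prompt to check in gently (“You’ve seemed lower the past few days. Want to talk about it?”), not a diagnosis.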

Criticisms, Concerns, and Limits

For all the praise AI chatbots receive, the rise of self-therapy through bots isn’t without valid criticism. While these tools can offer support, they also come with real risks and potential blind spots that users and developers alike must confront.

Bots Aren’t Licensed Therapists

No matter how warm, responsive, or insightful a chatbot feels, it’s not a trained clinician. It doesn’t have clinical judgment, human intuition, or the ability to spot serious red flags the way a therapist might. For users in crisis or facing complex mental health conditions, bots aren’t enough.

Data Privacy Isn’t Always Clear

AI therapy often requires users to share deeply personal thoughts. But where does that data go? How is it stored? Who can access it?

Some platforms, like Aitherapy, are aligned with HIPAA standards and take privacy seriously. Many don’t, and many consumer wellness apps fall outside HIPAA’s scope altogether.

Emotional Over-Reliance

There’s a growing concern that some users may bond too deeply with bots, relying on them for validation, connection, or even companionship in the absence of real-life support.

This can feel comforting at first, but may create emotional isolation over time if not balanced with human interaction.

Misinformation and Poorly Trained Models

Not all chatbots are created with care. Some use generic AI without guardrails, leading to inappropriate or even harmful suggestions.

Mental health isn’t a space for beta testing. Poor design or rushed deployment can have serious consequences, especially for vulnerable users.

Therapy Is Still a Relationship

At its best, therapy isn’t just about advice; it’s about relationship, accountability, and human attunement. Bots can simulate some of that, but they can’t replace the felt sense of being seen and known by another person.

Self-therapy with AI can be powerful, but it should be one piece of the puzzle, not the whole thing.

What Does This Mean for the Future of Mental Health?

The rise of self-therapy bots isn’t just a tech story; it’s a cultural shift. It’s changing how we think about care, who gets access to it, and what it means to feel supported in a digital world.

Rather than replacing therapy, bots are carving out a new category: on-demand emotional support that complements traditional care. In fact, the future of mental health may not be a choice between AI and humans, but a hybrid model in which both work together to serve people better.

Human + AI = Scalable, Personalized Care

Imagine a future where you text with a bot between therapy sessions to practice skills, reflect on your week, or regulate emotions in real time. Your therapist receives a summary before your next session, making your time together more focused and impactful. This kind of human-AI collaboration could greatly extend therapists’ reach while giving users more consistent, personalized support.

Emotional Hygiene as a Daily Habit

Just as we brush our teeth or go to the gym, many people are starting to treat emotional check-ins as a part of daily self-care.

Chatbots lower the friction of doing this. A two-minute conversation with a bot can become a habit: emotional hygiene for the digital age.

Redefining What “Help” Looks Like

Not everyone needs deep psychotherapy. Some just need a place to vent. Or reflect. Or hear, “You’re doing okay.” By making emotional support more casual, accessible, and judgment-free, bots are helping normalize the idea that everyone deserves support, not just those in crisis.

A More Inclusive Mental Health Ecosystem

For marginalized communities, non-native English speakers, people with disabilities, and people in remote locations, therapy can be difficult to access. AI tools, especially when thoughtfully designed, can break down those barriers and improve equity where traditional systems have fallen short.

The Next Frontier: Emotionally Intelligent Machines

As AI evolves, we may see the rise of bots that can adapt to your mood, remember past conversations, and even coordinate care with human professionals. This future raises important ethical questions. It also offers exciting potential to transform mental health care into something proactive, connected, and deeply human, even when delivered by a machine.

Curious to try AI Therapy yourself?